Data Decentralization

Aligning Strategy, Ownership, and Enterprise Scale

June 1, 2025 - 10 min read

In today’s data-driven world, the pressure on IT leaders has never been greater. Businesses across all industries—not just tech—are racing to become more agile, more integrated, and more scalable. But here’s the catch: the more systems we connect, the more complexity we create. And when every team relies on clean, current, and contextual data to make decisions, the old model of centralized data control starts to show its cracks.

Over the years, I’ve partnered with organizations that invested heavily in centralizing every application, report, and process into a single source of truth. It sounds good in theory, but in practice, it often slows everyone down. Subject matter experts (SMEs) in finance, operations, or quality end up waiting in line for IT to make updates or fix something they could’ve managed themselves. What starts as a quest for control turns into a bottleneck.

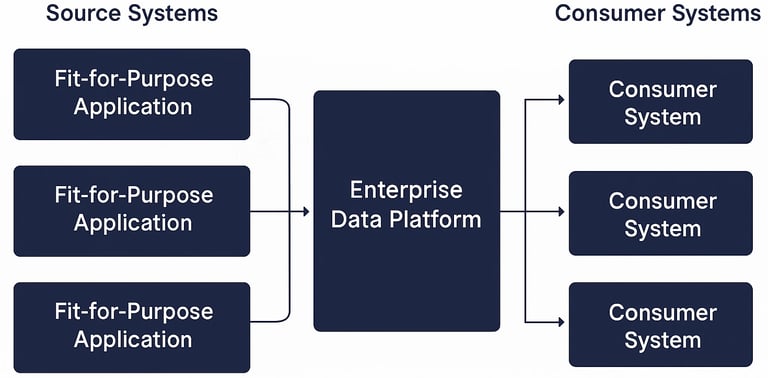

There’s a better approach. It starts by letting go of the idea that “one system to rule them all” should be the goal. Instead, we shift to a decentralized model, where each application serves as a “fit-for-purpose” system, owned by the team that knows it best. Then, we build a modern Enterprise Data Platform (EDP) that connects those systems, integrates their data, and enables collaboration across the organization.

This article is about that shift, from rigid centralization to smart decentralization, and how aligning your data architecture with your business strategy can unlock real agility, scalability, and trust in the data.

Why Centralized Data Architectures No Longer Scale

The idea of a centralized data architecture once made perfect sense. Build one system, or a tightly controlled data warehouse, that governs everything, and you get consistency, control, and a single version of the truth. It worked for a while, especially when systems were fewer and business models more static.

However, modern enterprises don’t operate in a static environment anymore. Teams move fast. Products evolve. Regulatory expectations shift. Customers want more transparency and responsiveness. Perhaps most disruptively, organizations acquire other organizations, each with its own systems, data models, and business processes. Trying to centralize everything in this environment is like asking every department in a city to submit requests through a single town hall; it slows things down and introduces unnecessary friction.

Here’s what typically happens:

Bottlenecks form in IT because every request, no matter how small, requires centralized processing, validation, and approval.

Context is lost when data is pulled out of the domain tools that teams actually use and understand.

Agility suffers because change requests must route through long prioritization and development cycles.

Shadow systems emerge as business teams build spreadsheets, databases, or workarounds to meet their timelines.

M&A integration slows when legacy systems from acquired entities are forced to conform to a rigid data architecture, often delaying value realization from the deal.

Even worse, a centralized model often reinforces the idea that data is owned by IT instead of stewarded by the people who generate and rely on it daily. That’s not just inefficient; it’s risky. Collaboration breaks down when teams don’t trust the data or feel disconnected from how it’s structured.

Modern organizations need an architecture that respects speed, domain expertise, and system diversity, especially in the face of mergers, acquisitions, or global expansion. That’s where decentralization, with the proper guardrails, starts to shine. It's not about chaos; it's about designing a system where the people closest to the data can move faster, while still feeding a broader enterprise vision.

In short, centralized control doesn’t scale, but decentralized coordination does.

Principles of a Decentralized Data Architecture

At the heart of a decentralized data architecture is a simple but powerful idea: the people who work with the data every day should have the most control over how it’s managed, used, and improved. Rather than routing every change through a central authority, each domain (finance, operations, R&D, quality, and commercial) becomes accountable for its own data products. This shift dramatically improves both speed and relevance.

Here are the core principles I’ve seen work in practice:

Systems Are Fit-for-Purpose: Each business unit should be empowered to use systems purpose-built for its needs. For example, quality might use a validated quality management system (QMS), operations might rely on a lightweight workflow tracker, and finance might run a cloud-based ERP. You don’t need to force every team onto the same platform; you do need to respect their tools and extract what matters.

Data Is a Product: In a decentralized model, data isn’t just exhaust from operations; it’s a product with stakeholders, SLAs, and continuous improvement. Each domain becomes the product owner of its data and is responsible for that data’s accuracy, structure, and fitness for downstream use.

Enterprise Data Platform (EDP) as the Backbone: The EDP acts as the central integration and collaboration layer. It doesn’t replace domain systems; it connects them. It extracts the data, transforms it for consistency, and loads it into a structured enterprise repository. This is where cross-functional analytics, reporting, and integration happen.

Loose Coupling, Strong Standards: Decentralized doesn’t mean disconnected. You need standard data models, naming conventions, and validation rules across domains. But instead of hardwiring everything together, you create APIs and ETL pipelines that allow flexibility and resilience without sacrificing governance.

Governance by Design, Not by Control: You’re not giving up oversight; you’re redesigning how it happens. Governance is built into the process through automated checks, metadata tracking, access controls, and audit trails. The goal is not to prevent change but to make change safe and transparent.
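Here is one way to make the “strong standards” and “governance by design” principles concrete: a minimal Python sketch of a shared data contract with automated checks and an audit trail. Everything in it is hypothetical. The `FieldRule` and `validate` names, the batch fields, and the rules themselves are illustrations, not a prescribed tool. In practice, rules like these would more likely live in a data catalog or validation framework than in hand-written code.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass(frozen=True)
class FieldRule:
    """One field in a hypothetical shared data contract."""
    name: str
    expected_type: type
    check: Callable[[Any], bool] = lambda value: True  # default: no extra rule
    description: str = ""


# Illustrative enterprise standard for a "batch" record published by the quality domain.
BATCH_CONTRACT = [
    FieldRule("batch_id", str, lambda v: v.startswith("B-"), "IDs use the B- prefix"),
    FieldRule("site_code", str, lambda v: len(v) == 3, "three-letter site code"),
    FieldRule("yield_pct", float, lambda v: 0.0 <= v <= 100.0, "yield between 0 and 100"),
]

# Append-only audit trail of every validation run (kept in memory for the sketch).
AUDIT_LOG: list[dict] = []


def validate(record: dict, contract: list[FieldRule], domain: str) -> list[str]:
    """Check a record against the contract, log the outcome, and return any violations."""
    violations = []
    for rule in contract:
        if rule.name not in record:
            violations.append(f"{rule.name}: missing")
        elif not isinstance(record[rule.name], rule.expected_type):
            violations.append(f"{rule.name}: expected {rule.expected_type.__name__}")
        elif not rule.check(record[rule.name]):
            violations.append(f"{rule.name}: {rule.description}")
    # Every check is recorded, pass or fail, so oversight is a by-product of the pipeline.
    AUDIT_LOG.append({
        "domain": domain,
        "record_key": record.get("batch_id"),
        "passed": not violations,
        "violations": violations,
        "checked_at": datetime.now(timezone.utc).isoformat(),
    })
    return violations


good = {"batch_id": "B-1001", "site_code": "BOS", "yield_pct": 97.5}
bad = {"batch_id": "1002", "site_code": "BOSTON", "yield_pct": 97.5}
print(validate(good, BATCH_CONTRACT, "quality"))  # []
print(validate(bad, BATCH_CONTRACT, "quality"))
# ['batch_id: IDs use the B- prefix', 'site_code: three-letter site code']
```

The key design choice is that the check never blocks the domain from changing its own system. It only reports whether published records meet the shared standard and keeps a trail of every result.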

In summary, a decentralized architecture builds trust and accountability when implemented well. It scales more naturally with the business, especially during growth events like mergers, product launches, or geographic expansion. It positions IT not as a bottleneck but as an enabler, providing the platforms, pipelines, and standards that let each domain do its best work.

It’s not about giving up control. It’s about distributing it wisely.

The Enterprise Data Platform: A Modern Integration Strategy

If decentralization is the philosophy, then the Enterprise Data Platform (EDP) is the engine that makes it work.

In a decentralized model, business domains stay in their lane: they use the tools that make them effective, focus on their core processes, and own their data. But this only works if a strong integration layer ties everything together behind the scenes. That’s where the EDP comes in.

The EDP isn’t just a data lake or a warehouse. It’s a platform strategy, a set of technologies and processes designed to Extract, Transform, and Load (ETL) data from multiple fit-for-purpose systems into a unified enterprise repository. This shared environment becomes the foundation for enterprise reporting, cross-functional analytics, forecasting, compliance dashboards, and more.
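The Extract, Transform, and Load pattern described above can be illustrated with a rough sketch. It pulls finance actuals from two hypothetical source systems whose field names and units differ, maps them onto one enterprise schema, and loads them into an in-memory SQLite table that stands in for the enterprise repository. The source names, fields, and the `actuals` table are assumptions made for the example. A real EDP would read from system APIs or databases and write to a managed warehouse or lakehouse.

```python
import sqlite3

# Hypothetical extracts from two fit-for-purpose systems; names and units differ on purpose.
ERP_ROWS = [
    {"CostCenter": "CC-100", "Period": "2025-05", "ActualUSD": "125000.50"},
    {"CostCenter": "CC-200", "Period": "2025-05", "ActualUSD": "98000.00"},
]
ACQUIRED_ERP_ROWS = [
    {"cost_ctr": "cc-300", "fiscal_month": "05/2025", "actual_k_usd": 42.5},
]


def extract() -> list[tuple[str, dict]]:
    """Tag each raw row with its source system (stand-in for API or database reads)."""
    return [("erp", r) for r in ERP_ROWS] + [("acquired_erp", r) for r in ACQUIRED_ERP_ROWS]


def transform(source: str, row: dict) -> tuple[str, str, float, str]:
    """Map a source-specific row onto one schema: (cost_center, period, actual_usd, source)."""
    if source == "erp":
        return (row["CostCenter"], row["Period"], float(row["ActualUSD"]), source)
    if source == "acquired_erp":
        month, year = row["fiscal_month"].split("/")  # "MM/YYYY" -> "YYYY-MM"
        return (row["cost_ctr"].upper(), f"{year}-{month}", row["actual_k_usd"] * 1000, source)
    raise ValueError(f"no mapping defined for source {source!r}")


def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Write harmonized rows into the shared enterprise table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS actuals ("
        "cost_center TEXT, period TEXT, actual_usd REAL, source_system TEXT)"
    )
    conn.executemany("INSERT INTO actuals VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, [transform(src, row) for src, row in extract()])

# One consolidated query now spans both systems.
total = conn.execute("SELECT SUM(actual_usd) FROM actuals WHERE period = '2025-05'").fetchone()[0]
print(round(total, 2))  # 265500.5
```

Each source keeps its own format, and only `transform` knows how to translate it. Onboarding another system therefore means adding a mapping rather than changing the source.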

Here’s what makes a modern EDP effective:

It abstracts complexity: Rather than forcing each team to understand the structure and semantics of another department’s data, the EDP serves as a translator. It harmonizes formats, standardizes naming conventions, and resolves conflicts so downstream consumers don’t have to.

It protects upstream autonomy: Each domain continues using its own system of record, whether it’s a lab information system, CRM, MES, or ERP, without being slowed down by enterprise reporting needs. The EDP allows them to operate independently while still contributing to the greater whole.

It accelerates integration: Especially in environments where acquisitions are common, the EDP allows for faster onboarding of new systems. You don’t need to rip and replace; you can connect, transform, and integrate in a matter of weeks, not years.

It feeds enterprise-level consumers: Finance teams get consolidated actuals, forecasts, and KPIs. Executives get dashboards that pull from every corner of the business. Scientists get visibility into lab output across sites. And no one’s waiting for a six-month data warehouse overhaul to make a change.

It enforces standards at scale: Even with decentralized inputs, the EDP becomes the place where data governance, lineage, quality rules, and security policies are enforced. It’s where you track where data came from, how it was transformed, and who touched it.
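The translator and lineage roles in the list above can be sketched in a few lines of Python. This is a minimal example under stated assumptions: `TrackedRecord`, `NAME_MAP`, the CRM field names, and the `edp_pipeline` actor are all hypothetical. Each transformation step appends a lineage entry that records what ran, who ran it, which fields changed, and when. That is the kind of trail you need to answer where a value came from and who touched it.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class TrackedRecord:
    """A record plus the lineage entries describing how it reached its current form."""
    data: dict
    source_system: str
    lineage: list[dict] = field(default_factory=list)

    def apply(self, step_name: str, actor: str, fn: Callable[[dict], dict]) -> "TrackedRecord":
        """Run one transformation and log which fields it changed."""
        before = dict(self.data)
        self.data = fn(self.data)
        changed = sorted(
            k for k in set(before) | set(self.data) if before.get(k) != self.data.get(k)
        )
        self.lineage.append({
            "step": step_name,
            "actor": actor,
            "changed_fields": changed,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        return self


# Hypothetical naming standard: source column names mapped to enterprise names.
NAME_MAP = {"cust_no": "customer_id", "CustName": "customer_name"}


def standardize_names(d: dict) -> dict:
    return {NAME_MAP.get(k, k): v for k, v in d.items()}


def trim_strings(d: dict) -> dict:
    return {k: v.strip() if isinstance(v, str) else v for k, v in d.items()}


rec = TrackedRecord({"cust_no": "C-42", "CustName": "  Acme Corp "}, source_system="crm")
rec.apply("standardize_names", "edp_pipeline", standardize_names)
rec.apply("trim_strings", "edp_pipeline", trim_strings)
print(rec.data)  # {'customer_id': 'C-42', 'customer_name': 'Acme Corp'}
for entry in rec.lineage:
    print(entry["step"], entry["changed_fields"])
```

Downstream consumers see only the standardized names. The lineage list keeps the path back to the original CRM fields.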

In summary, the EDP isn’t just middleware; it’s the connective tissue that supports agility without sacrificing trust. It gives you the ability to scale, adapt, and analyze without bogging down your teams with integration headaches. More importantly, it turns data into a shared asset—available, understandable, and useful across the business.

When people ask how to unlock the value of decentralization without losing control, my answer is simple: You need a strong EDP strategy. Without it, you’re just managing data silos with better branding. With it, you’re building a proper digital foundation for collaboration, insight, and growth.

Strategic IT Roadmapping: Bridging Business and Technology

A decentralized data architecture works best when it’s not just a technical design but a shared vision between business and IT. That’s where strategic IT roadmapping becomes essential.

Too often, IT is treated as a reactive function, tasked with supporting whatever priorities emerge from the business without being invited to shape them. The result? Disconnected projects, competing timelines, and technology investments that don’t scale or integrate well. In a decentralized model, this problem only gets worse if left unchecked.

What’s needed is a co-created roadmap, a joint effort between IT and business leadership to define priorities, set realistic expectations, and ensure that everyone is rowing in the same direction.

What Makes a Strategic IT Roadmap Work?

Business Priorities Come First: The roadmap starts with what matters most to the organization, whether that’s launching a new product, improving customer satisfaction, reducing operational overhead, or enabling faster M&A integration. IT’s role is to ask: what do we need to build, connect, or support to make this happen?

Dependencies Are Mapped, Not Assumed: Every initiative touches data, often across multiple systems. The roadmap must account for interdependencies between teams, systems, and platforms. If quality data is needed to enable a supply chain dashboard, that linkage should be clear in both the timeline and budget.

Capacity Is Grounded in Reality: Whether it’s internal bandwidth or vendor capacity, the roadmap should reflect what’s realistically achievable. This helps avoid overpromising and underdelivering and encourages teams to focus on sequencing, not just speed.

Workshops Replace Top-Down Directives: Collaborative working sessions between IT and business units are critical. These workshops uncover pain points, clarify ownership, and align teams on what's being built and why. In these conversations, the roadmap becomes not just a list of projects, but a plan everyone believes in.

Flexibility Is Built In: Change is inevitable, whether due to an acquisition, new regulation, or a shift in market strategy. The roadmap isn’t static. It should be reviewed regularly and adjusted in a controlled way. Transparency in these changes builds trust and keeps momentum.

When done right, strategic roadmapping transforms IT from an order-taker into a business enabler. It ensures that decentralized systems still serve a centralized mission. It creates a space where cross-functional initiatives like building out your EDP, integrating an acquisition, or modernizing lab operations can actually move forward without stepping on each other’s toes.

This is where IT earns a seat at the strategy table, not because it pushes technology but because it solves real problems alongside its business partners.

Governance Without Bureaucracy: The Role of a TMO

As organizations decentralize their data and distribute ownership across business domains, a natural question arises: How do we stay aligned without slowing everything down? The answer lies in smart, lightweight governance, delivered through a Transformation Management Office (TMO).

Unlike a traditional PMO, which often focuses on project tracking and resource allocation, a TMO operates at the intersection of strategy, execution, and change management. It’s designed for environments where priorities shift, timelines are interdependent, and collaboration between IT and the business must be more than lip service.

A TMO isn’t a gatekeeper in decentralized environments; it’s an orchestrator.

Why a TMO Matters in a Decentralized Model

Keeps the Roadmap Aligned Across Functions: When each domain owns its systems and data, initiatives can easily drift apart. The TMO ensures that business and IT priorities stay in sync, especially across shared infrastructure like the Enterprise Data Platform.

Connects the Dots Between Strategy and Execution: It’s one thing to agree on goals in a workshop; it’s another to track them through delivery. The TMO drives accountability by maintaining visibility into what’s being built, by whom, and how it connects to broader outcomes.

Reduces Friction Without Adding Red Tape: Governance doesn’t have to mean layers of approval or bloated processes. A well-run TMO uses just enough structure (scorecards, check-ins, and working sessions) to keep projects moving while still enabling flexibility and fast decision-making.

Manages Interdependencies Across Decentralized Systems: Whether you’re integrating new systems after an acquisition or coordinating multiple data streams into your EDP, the TMO ensures that timelines and handoffs are clear. This is especially valuable when multiple vendors, business units, or geographies are involved.

Provides a Home for Change Leadership: A decentralized data strategy often requires a shift in mindset, both culturally and operationally. The TMO can support communication plans, training, adoption metrics, and feedback loops that help new ways of working take root.

In short, the TMO brings just enough centralization to keep a decentralized strategy from flying off the rails. It enables autonomy without anarchy and scale without bureaucracy.

If your organization is pursuing decentralization but struggling to maintain cohesion, a TMO may be the missing piece. It doesn’t replace leadership; it amplifies it by aligning cross-functional teams, reinforcing strategy, and quietly removing roadblocks that get in the way of progress.

The Human Element: Driving Adoption and Trust

No matter how elegant the architecture or how well-designed the roadmap, success ultimately hinges on people. Adoption isn’t automatic, especially in decentralized models where control shifts and new responsibilities emerge. If your teams don’t trust the data, understand the purpose, or feel empowered by the change, even the best systems will fall flat.

In my experience, the human element is often the biggest risk—and the biggest opportunity.

Why People Push Back

Resistance to decentralization isn’t just fear of change. It often comes down to:

Unclear ownership – Who’s responsible for the data now?

Lack of context – Why are we doing this, and what’s in it for me?

Fear of accountability – If I own the data, am I on the hook if something goes wrong?

Tool fatigue – Another platform? Another dashboard? What’s different this time?

These concerns are valid. If ignored, they can create confusion, siloed behaviors, or worse, shadow systems that quietly undercut the entire strategy.

What Drives Adoption

To truly embrace decentralized data and enterprise-wide platforms, people need more than access; they need clarity, confidence, and support. Here’s what helps:

Co-create, not hand-off: Involve stakeholders early, during system selection, requirements gathering, and pilot programs. When people help shape the solution, they’re far more likely to use it.

Make it easier to do the right thing: Don’t over-engineer workflows. If users have to jump through hoops to follow the new process, they’ll find a workaround. Design with empathy and test with real users before scaling.

Reinforce trust with visible wins: Roll out functionality in phases. Celebrate when teams gain new insights, streamline reports, or eliminate redundant data entry. These moments build momentum and reinforce that the effort is worth it.

Train for confidence, not just compliance: People need more than one-time training. Offer just-in-time guides, office hours, and real-world use cases to help them feel equipped, not overwhelmed.

Acknowledge and reward ownership: When domain experts take responsibility for their data products, recognize it. Highlight teams that improve quality, automate reporting, or simplify handoffs. Ownership becomes contagious when it’s appreciated.

Adoption is less about forcing change and more about earning trust. A decentralized strategy only works if the people closest to the data feel empowered to own it, and know they’re supported by a leadership team that values their expertise.

At the end of the day, data architecture is about humans: enabling them to work smarter, collaborate more effectively, and make better decisions. Get the human element right, and the technology tends to follow.

Closing: Decentralization as Strategic Enablement

Decentralization isn’t a shortcut, a trend, or a workaround; it’s a deliberate, strategic choice to empower the people closest to the work. When done right, it transforms data from a bottleneck into a catalyst. It respects domain expertise while still supporting enterprise collaboration. It enables teams to move faster without compromising governance or quality.

This is not about letting go of control; it’s about distributing it intelligently. With fit-for-purpose systems in place, a well-structured Enterprise Data Platform at the center, and a roadmap built in partnership between IT and business leadership, decentralization becomes the foundation for something bigger: agility, scale, and sustainable growth.

In environments where M&A is frequent, innovation is constant, and regulatory complexity is the norm, a centralized “one-system-to-rule-them-all” approach simply can’t keep up. What today’s organizations need is balance: local autonomy paired with enterprise-wide visibility.

If there’s one lesson I’ve learned over decades of building data platforms and leading digital transformation initiatives, it’s this:

Let your domain experts use the tools that make them successful—and give your organization a shared platform that makes it all work together.

That’s what decentralization enables. That’s how you scale. And that’s how you turn data into a strategic advantage—not just a technical asset.